OmniGPT’s Massive Data Leak: 30,000 Emails, 34 Million Messages

While OpenAI’s systems remain uncompromised, a new breach has surfaced, affecting OmniGPT.co—a lesser-known ChatGPT alternative.

The Truth Behind the "ChatGPT Hack" Claims

In recent days, the cybersecurity community has been buzzing with alarming headlines about a so-called "ChatGPT data breach." A widely circulated post on underground forums claimed that a threat actor had stolen 20 million OpenAI account credentials and was offering them for sale at a nominal price. However, as is often the case in the cybercrime ecosystem, the reality is quite different from the sensationalized claims.

The alleged "breach" was, in fact, another case of stealer logs being marketed as a large-scale compromise. Rather than coming from a direct breach of OpenAI's systems, these credentials were most likely harvested by infostealer malware running on compromised devices. Cybercriminals commonly use infostealers such as RedLine, Raccoon, and Vidar to extract saved credentials from infected systems, then repackage and resell them under the guise of a large-scale hack. The pattern is not new: previous "hacks" of platforms such as Netflix, Facebook, and LinkedIn have often turned out to be nothing more than logs aggregated from individually compromised users.

The Difference Between a Breach and Stealer Logs

A genuine data breach occurs when an attacker infiltrates a system and extracts sensitive information directly from the target's infrastructure. Common causes include security misconfigurations, zero-day vulnerabilities, and compromised account credentials (for example, through credential stuffing). Stealer logs, on the other hand, contain credentials stolen from individual users' devices, typically after those devices were infected through phishing campaigns, malicious browser extensions, or trojanized software downloads. While both pose security risks, a direct database breach is far more serious because it points to flaws in the organization's own security posture.

Unlike a stealer log dump, a direct database breach can give the attacker access to user communications, stored information, and potentially proprietary data, which is far more damaging than simple credential theft. That brings us to the latest, more concerning case: OmniGPT.

OmniGPT’s Massive Data Leak: 30,000 Emails, 34 Million Messages

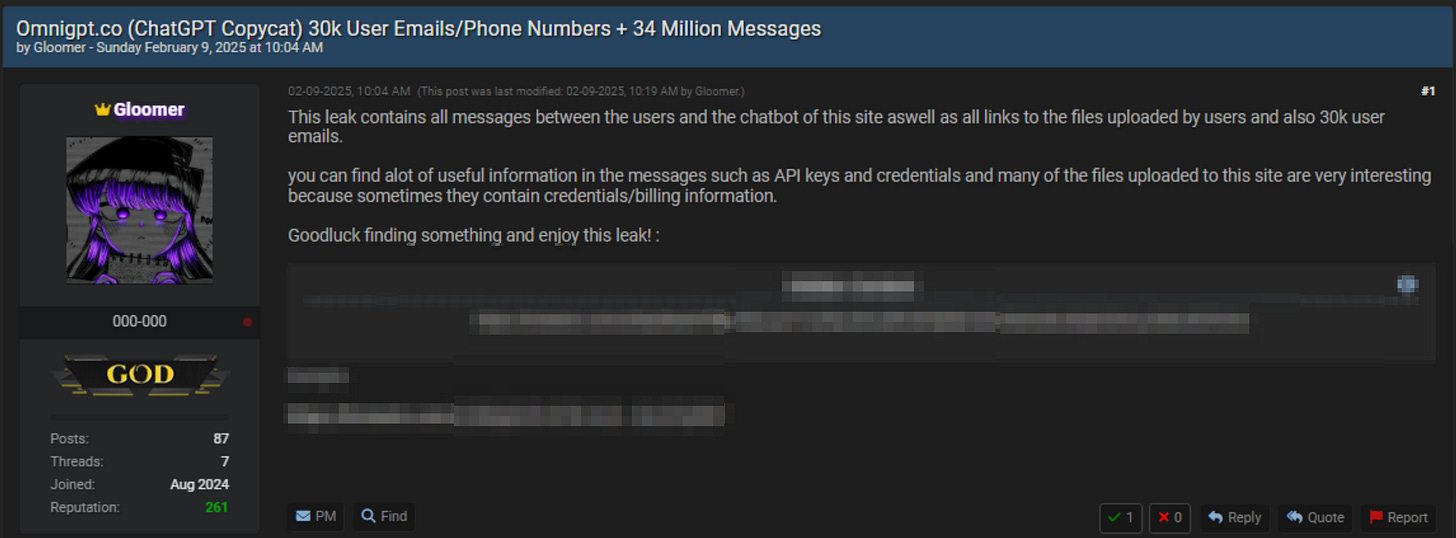

A hacker operating under the alias "Gloomer" recently posted on a cybercrime forum, offering a database from OmniGPT.co that reportedly contains:

- 30,000 user emails and phone numbers
- 34 million messages exchanged between users and the chatbot
- Files uploaded by users, potentially containing sensitive information

According to the threat actor, the leaked messages may contain API keys, credentials, and billing information. That is an alarming prospect given the growing reliance on AI chatbots for both professional and personal use.

Unlike the misleading OpenAI breach claims, this OmniGPT leak appears to be legitimate, although the exact method of compromise remains unknown. Possible explanations include a misconfigured database, an exposed API endpoint, or an insider threat. Because the leak contains both user communications and account metadata, the attacker likely gained broad access to OmniGPT's backend systems.

Implications and Lessons

This breach is yet another reminder that AI-driven platforms, particularly third-party alternatives to mainstream AI services, are becoming attractive targets for cybercriminals. Users should be especially cautious about entering sensitive information into these platforms, as their security standards may not match those of established companies like OpenAI or Google.

Additionally, organizations must enforce strict data protection measures, including:

- Proper encryption of stored user data
- Regular security audits and penetration testing
- Restricting API access to authorized users
- Implementing multi-factor authentication (MFA) for administrative access
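Two of the measures above, restricting API access and MFA for administrative access, can be sketched with Python's standard library alone. This is a minimal illustration, not OmniGPT's or any vendor's actual implementation. It assumes that API keys are stored only as SHA-256 digests, and that admin MFA uses time-based one-time passwords (TOTP, RFC 6238) with the common defaults of HMAC-SHA1, 30-second steps, and 6 digits. All function names are hypothetical.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional, Set

TOTP_STEP = 30  # seconds per code (assumed common default)


def hash_api_key(key: str) -> str:
    """Digest stored server-side in place of the raw API key."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def is_authorized(presented_key: str, stored_digests: Set[str]) -> bool:
    """Return True if the presented key hashes to one of the stored digests."""
    candidate = hash_api_key(presented_key)
    # compare_digest avoids leaking how many leading characters matched.
    return any(hmac.compare_digest(candidate, d) for d in stored_digests)


def totp(secret: bytes, at: Optional[float] = None,
         step: int = TOTP_STEP, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for time `at`."""
    if at is None:
        at = time.time()
    counter = int(at // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation (RFC 4226, section 5.3)
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % (10 ** digits)).zfill(digits)


def verify_admin_otp(secret_b32: str, submitted: str, window: int = 1,
                     now: Optional[float] = None) -> bool:
    """Accept a code from the current step or +/- `window` steps (clock drift)."""
    secret = base64.b32decode(secret_b32, casefold=True)
    if now is None:
        now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * TOTP_STEP).encode(),
                            submitted.encode())
        for i in range(-window, window + 1)
    )
```

Because API keys are long random strings, a fast hash like SHA-256 is a reasonable way to store them; user passwords, by contrast, need a slow, salted scheme such as `hashlib.scrypt`. In production, a maintained library such as `pyotp` is a safer choice than hand-rolled TOTP.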

While OpenAI users can breathe easier knowing the recent claims were exaggerated, OmniGPT users should take immediate precautions, such as changing the credentials associated with their accounts and reviewing any sensitive data they may have shared.
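For the "reviewing any sensitive data" step, one rough first pass is to scan a plain-text copy of one's chat history for strings that look like secrets. The sketch below assumes such a text copy is available, and its patterns are illustrative rather than exhaustive: an OpenAI-style `sk-` key prefix, the AWS access key ID format (`AKIA` plus 16 uppercase letters or digits), `password:`-style assignments, and card-like digit runs filtered with the Luhn checksum. The pattern and function names are hypothetical, and the scan will produce both false positives and misses, so its output still needs a human look.

```python
import re

# Illustrative patterns only; dedicated secret scanners ship far larger rule sets.
PATTERNS = {
    "openai_style_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*\S+"),
}
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on card-like numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def scan(text: str):
    """Return (line_number, kind, matched_text) tuples for suspicious strings."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            for m in pattern.finditer(line):
                findings.append((lineno, kind, m.group(0)))
        for m in CARD_CANDIDATE.finditer(line):
            digits = re.sub(r"[ -]", "", m.group(0))
            if luhn_ok(digits):
                findings.append((lineno, "card_number", m.group(0)))
    return findings
```

Anything flagged should be treated as already exposed: rotate the key or change the password at its source rather than only deleting the message.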

As AI adoption continues to accelerate, so too will the cybersecurity threats against these platforms. Staying informed and vigilant is no longer optional—it’s a necessity.