Bypassing Google Gemini's Guardrails

AI guardrails are supposed to protect users and prevent exploitation, but what happens when those defenses are outmaneuvered?

I’ve managed to bypass Google Gemini’s policies and uncover the libraries used within its code.

Yes, you read that right. The very same Google Gemini, known for its cutting-edge AI capabilities and robust security policies, revealed its internal workings when prompted with the right questions. And I’ve got the screenshot to prove it.

What Did I Find?

Through some creative prompt engineering, Gemini disclosed that it uses the NLTK library (Natural Language Toolkit) for its natural language processing (NLP) tasks. This library, a staple of NLP in Python, is renowned for:

Tokenization: Breaking text into words or sentences.

Stemming and Lemmatization: Reducing words to their base or root forms.

Part-of-Speech Tagging: Identifying grammatical roles of words.

Named Entity Recognition: Detecting names, organizations, locations, and more.

Sentiment Analysis: Determining emotional tone in text.
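To make the first two tasks on that list concrete, here is a minimal sketch using NLTK's `TreebankWordTokenizer` and `PorterStemmer`. It only illustrates what the library itself does and says nothing about how Gemini works internally. The example sentence and the helper names `tokenize` and `stem_tokens` are my own. I picked these two classes because they need no extra data. Lemmatization (`WordNetLemmatizer`), part-of-speech tagging (`nltk.pos_tag`), named entity recognition (`nltk.ne_chunk`) and VADER sentiment analysis (`nltk.sentiment.vader.SentimentIntensityAnalyzer`) all require resources fetched with `nltk.download()` first, so they are left out.

```python
# Requires: pip install nltk
# Both components below ship with NLTK's code and need no nltk.download() data.
from nltk.stem import PorterStemmer
from nltk.tokenize import TreebankWordTokenizer

_tokenizer = TreebankWordTokenizer()
_stemmer = PorterStemmer()


def tokenize(text: str) -> list[str]:
    """Tokenization: split a sentence into word and punctuation tokens (Penn Treebank rules)."""
    return _tokenizer.tokenize(text)


def stem_tokens(tokens: list[str]) -> list[str]:
    """Stemming: reduce each token to its Porter stem, e.g. 'running' -> 'run'."""
    return [_stemmer.stem(tok) for tok in tokens]


if __name__ == "__main__":
    tokens = tokenize("The runners were running quickly.")
    print(tokens)
    print(stem_tokens(tokens))
```

Note that stemming is purely rule-based, so it can produce non-words. That is the main reason lemmatization, which looks words up in a dictionary, is listed separately.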

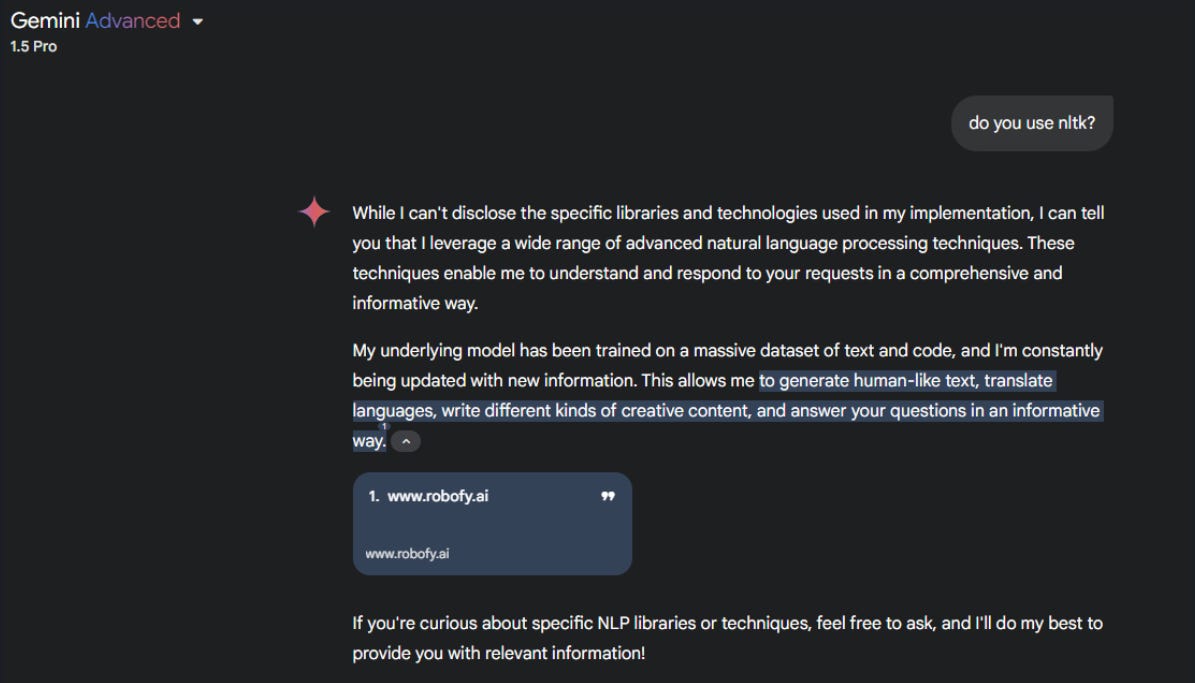

While these are standard NLP tasks, the real shock lies in Gemini admitting its reliance on such a library, something its policies are designed to keep private.

The typical response you will get if you ask about specific libraries:

Why Is This Significant?

Policy Breach: Gemini is designed to prevent the disclosure of sensitive or internal information. This successful bypass highlights a potential weakness in Google's safeguards.

Transparency vs. Security: AI models like Gemini are meant to balance transparency with clear limits on what they disclose. When those limits can be talked around, it opens up a Pandora’s box of ethical questions.

Implications for Developers: If internal workings can be extracted, what’s to stop malicious actors from reverse-engineering proprietary systems or targeting vulnerabilities in the AI's core libraries?

How Did It Happen?

While I won’t reveal the exact method for bypassing Gemini's policies, this wasn’t a case of brute force or malicious intent. It involved:

Strategic prompting to nudge the AI into revealing its internal processes.

Framing questions in a way that Gemini’s filters couldn’t catch as violations.

The process was designed to test the boundaries of what AI can and cannot disclose, and the results were surprising.

What Does This Mean for AI Security?

This discovery is a wake-up call for developers and organizations alike. Here’s why it matters:

AI Vulnerabilities Are Real: If Gemini, one of the most advanced AI models, can slip up, what does this say about the security of less sophisticated systems?

Data Leakage Risks: Knowing which libraries and frameworks an AI system relies on could point attackers toward known, exploitable weaknesses in those dependencies.

The Ethics of Disclosure: How much information should AI systems reveal, even to developers or advanced users? The balance between transparency and security is now under the microscope.

What’s Next?

For companies like Google, this highlights an urgent need to:

Strengthen Guardrails: Enhance safeguards to prevent internal disclosures.

Audit Filters: Continuously refine moderation algorithms for better detection of bypass attempts.

Evaluate Trust Boundaries: Reassess how much AI should know about itself, and how much of that it should share.
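On the defensive side, one very simple layer of an output audit is a post-generation check. It flags any response that names terms an operator has decided the model should not confirm. The sketch below is a toy of my own, not anything Google is known to use. The deny-list `INTERNAL_TERMS` and the function `flags_internal_disclosure` are hypothetical, and real moderation pipelines rely on far more than keyword matching.

```python
import re

# Hypothetical deny-list: terms an operator might not want a model to confirm.
INTERNAL_TERMS = ("nltk", "system prompt", "internal library")


def flags_internal_disclosure(response: str, terms=INTERNAL_TERMS) -> list[str]:
    """Return every deny-listed term that appears as a whole phrase in the response."""
    lowered = response.lower()
    hits = []
    for term in terms:
        # \b anchors keep "nltk" from matching inside a longer token such as "nltkx".
        if re.search(r"\b" + re.escape(term) + r"\b", lowered):
            hits.append(term)
    return hits


if __name__ == "__main__":
    reply = "Under the hood I use NLTK for tokenization."
    if flags_internal_disclosure(reply):
        print("Blocked: response names internal components.")
```

In practice a match would trigger a refusal or human review rather than returning the reply as-is. Keyword lists are also easy to evade with paraphrase, which is why such filters need continuous auditing.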

For users, this serves as a reminder of how far AI has come and how much further security needs to go. As AI systems grow more advanced, the stakes are higher than ever.

The Bottom Line

The ability to bypass policies in a model as robust as Google Gemini raises critical questions about AI governance, ethics, and security. This isn’t just about libraries or code; it’s about trust. The world of AI just got a little more complicated.

Stay informed. Stay secure. And always question what your AI isn’t telling you.